Audio

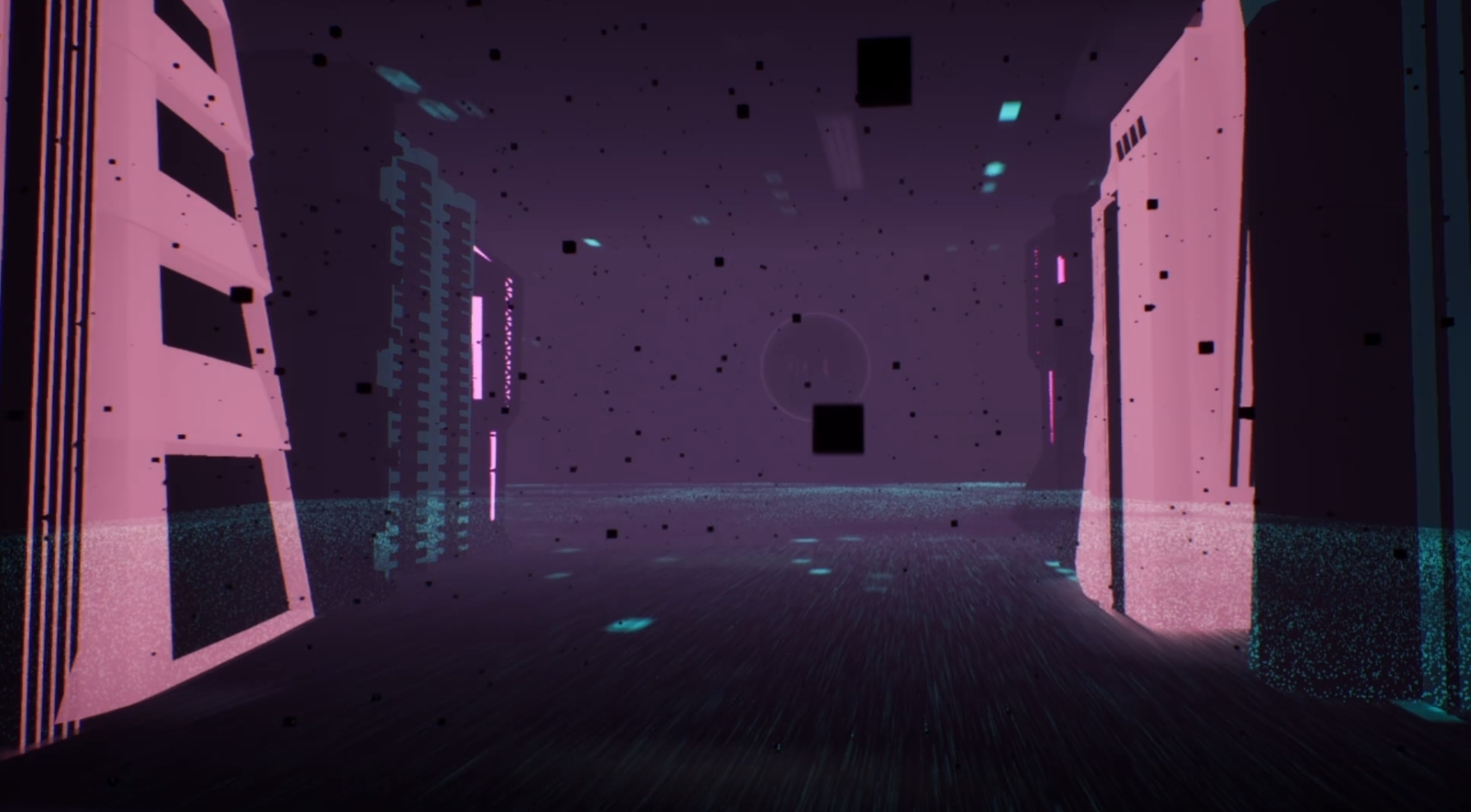

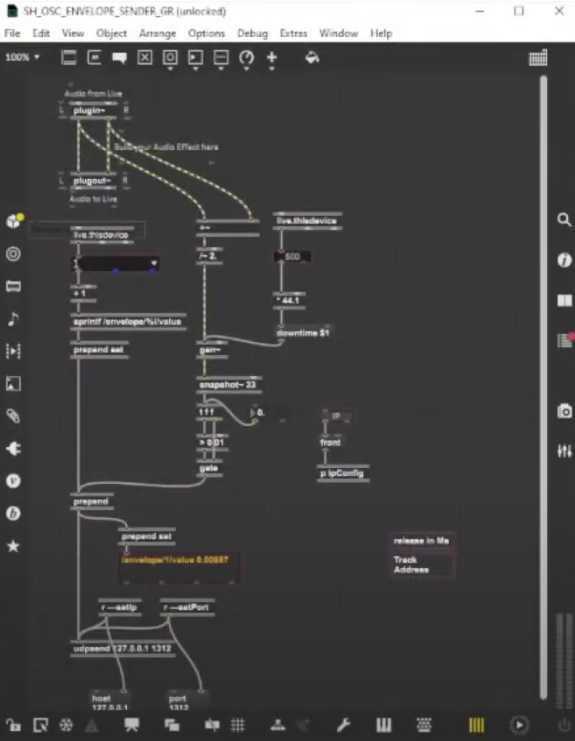

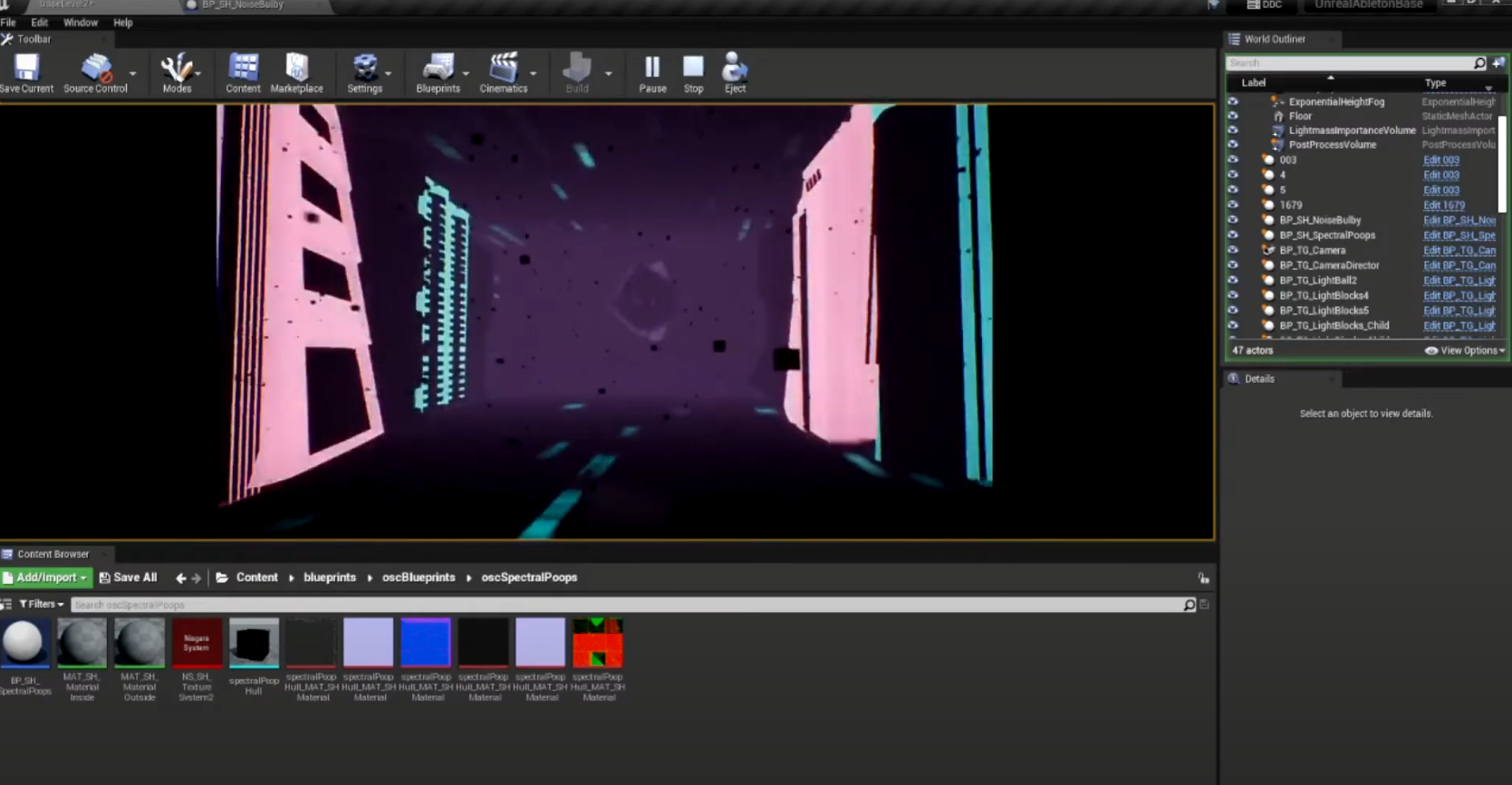

The audio for the experience was developed in Ableton, with each track running separately so that it can drive a different visual element. Two of the elements are interactive: the cloud cover wave, which fluctuates with voice input from a mic, and the gray sun orb, which distorts in response to MIDI keyboard hits. The other three elements (the pixel raindrops, the building lights, and the sky) are synced to individual parts of the mix such as the snare, claps, and bass. Once the sound design was complete, the tracks were run through envelopes in Max to track frequency levels. When a level crosses a set threshold, Max outputs the reading to Unreal Engine, where our cyber city was built and where the programs combine to form the final experience.
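The envelope-and-threshold step can be illustrated outside of Max. The sketch below is not the patch used in the project; it is a minimal Python approximation that assumes a simple one-pole amplitude envelope follower and a rising-edge threshold check. The function names, the attack and release times, and the threshold value are all hypothetical choices made for illustration.

```python
import math


def envelope_follower(samples, sample_rate, attack_s=0.005, release_s=0.1):
    """Smooth the absolute signal level with separate attack/release times.

    Illustrative stand-in for an envelope stage; parameter values are assumed.
    """
    attack = math.exp(-1.0 / (attack_s * sample_rate))
    release = math.exp(-1.0 / (release_s * sample_rate))
    level = 0.0
    envelope = []
    for sample in samples:
        magnitude = abs(sample)
        # Rise quickly when the signal gets louder, fall slowly when it fades.
        coeff = attack if magnitude > level else release
        level = coeff * level + (1.0 - coeff) * magnitude
        envelope.append(level)
    return envelope


def threshold_crossings(envelope, threshold):
    """Return the indices where the envelope rises to or above the threshold."""
    triggers = []
    above = False
    for i, level in enumerate(envelope):
        if level >= threshold and not above:
            triggers.append(i)  # fire once per hit, not on every loud sample
            above = True
        elif level < threshold:
            above = False
    return triggers


# Two short bursts (e.g. two snare hits) separated by silence.
sample_rate = 1000
signal = [0.0] * 100 + [1.0] * 100 + [0.0] * 1000 + [1.0] * 100
envelope = envelope_follower(signal, sample_rate)
triggers = threshold_crossings(envelope, threshold=0.5)
print(triggers)
```

Each index in `triggers` marks one hit. In a setup like the one described here, that is the moment at which a reading would be passed on to the engine; how the message is transported is outside the scope of this sketch.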